What is MCP in AI?

What you should know about the Model Context Protocol: building the “Tower of Babel” of LLMs.

An LLM on its own is limited: it cannot reach your data or your tools. How can we connect it to APIs smartly, and can that scale?

The Model Context Protocol (MCP) is a layer between an AI application and the tools and data it relies on. It is a standard that simplifies how large language models (LLMs) access various data sources. Developed by Anthropic, MCP replaces complex one-off integrations with standardised communication, making AI development faster and smoother. It is also designed to support a new generation of AI tools and the developer toolsets built around them. This article explores what MCP is, its benefits, and how to implement it.

Imagine you have a robot that paints, plays music, and writes stories. Then the painting API is upgraded, and the robot can no longer paint until someone rewrites the integration. MCP aims to remove that integration effort. This is where InvestGlass is focusing its research now.

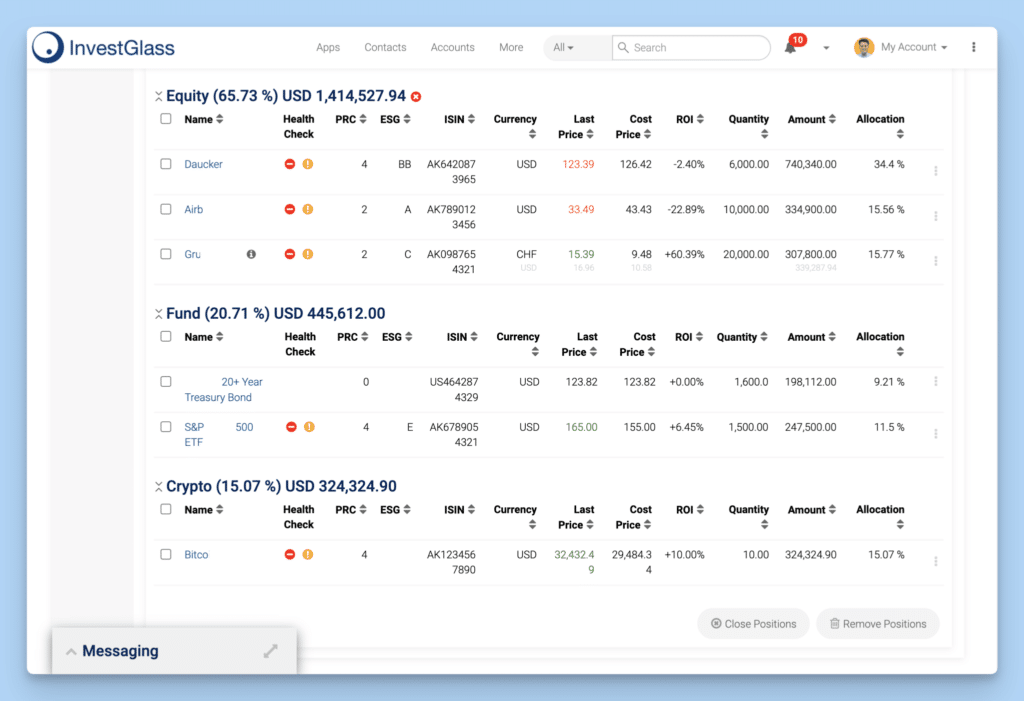

InvestGlass offers a Swiss-made CRM and client portal tailored for banks, financial advisors, and fintech firms, featuring automation tools for onboarding, KYC, portfolio management, and communication. It streamlines compliance and client engagement while ensuring data privacy with on-premise and cloud hosting options.

Key Takeaways: MCP Is an API Layer for LLMs, and the Future of InvestGlass

- The Model Context Protocol (MCP) standardises context provision for large language models, facilitating seamless integration and reducing development time for AI applications.

- MCP’s client-server architecture enhances communication between AI applications and data sources, significantly simplifying integration and increasing interoperability.

- Adopting MCP improves workflow efficiency for developers by eliminating custom integration needs, offering flexibility in programming environments, and allowing focus on innovation.

Understanding the Model Context Protocol (MCP)

The Model Context Protocol (MCP) is more than a conventional technical norm: it aims to be a reference point for how AI systems are built. Established by Anthropic, MCP standardises how context is supplied to large language models, making it easier to connect AI systems to diverse data sources. By letting AI models connect directly to a range of clients and resources, the protocol consolidates interactions and cuts development time. MCP acts like a USB-C port, serving as a standardized interface for connecting AI models to various data sources and tools, enhancing interoperability and efficiency. Developers are embracing MCP because it promises to unlock more of what AI can do while removing traditional development obstacles.

Fundamentally, MCP is an open protocol that lets AI applications and data sources communicate through a defined framework for structuring and exchanging messages. This promotes compatibility and streamlines integration, freeing developers from piecemeal integrations so they can focus on building new tools.

Adopting MCP also gives developers a scalable foundation for managing the growing complexity of AI systems, so they are better prepared for future developments in the technology.

LLMs are going to become more capable. Anthropic is building a standard, and we suggest caution, as the standard is not yet fixed.

Core Concepts of MCP

The Model Context Protocol (MCP) is composed of two principal elements: servers and clients. An MCP server handles requests and grants access to external tools or data sources as needed, while an MCP client requests resources and processes the data it receives. This client-server architecture creates standardized channels for AI applications to communicate with different data providers, promoting streamlined integration and minimizing reliance on bespoke solutions.

At the heart of its operation, MCP utilizes a protocol layer responsible for regulating activities such as message structuring and associating inquiries with their respective responses. By adopting JSON-RPC 2.0 for messaging purposes, it guarantees orderly communication that adheres to established formats. During the initial negotiation phase, clients must communicate their supported protocol version to the server, which then responds accordingly, allowing for a tailored interaction based on the capabilities defined in that version.
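The version negotiation described above can be sketched in a few lines of Python. This is a minimal illustration, not the official SDK: the field names follow the published MCP specification as we understand it, while the version string `2024-11-05`, the client and server names, and the helper functions are assumptions chosen for the example.

```python
import json

# Hypothetical list of protocol versions this toy server understands.
SERVER_SUPPORTED_VERSIONS = ["2024-11-05"]


def build_initialize_request(request_id: int, protocol_version: str) -> dict:
    """Build a JSON-RPC 2.0 request announcing the client's protocol version."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": protocol_version,
            "capabilities": {},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }


def handle_initialize(request: dict) -> dict:
    """Reply with the requested version if supported, else the server's latest."""
    requested = request["params"]["protocolVersion"]
    if requested in SERVER_SUPPORTED_VERSIONS:
        agreed = requested
    else:
        agreed = SERVER_SUPPORTED_VERSIONS[-1]
    return {
        "jsonrpc": "2.0",
        "id": request["id"],  # the shared id ties the response to its request
        "result": {
            "protocolVersion": agreed,
            "capabilities": {},
            "serverInfo": {"name": "example-server", "version": "0.1.0"},
        },
    }


request = build_initialize_request(1, "2024-11-05")
response = handle_initialize(request)
print(json.dumps(response, indent=2))
```

Because the response carries the same `id` as the request, the client can match each answer to the inquiry that produced it, which is the association the protocol layer is responsible for.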

MCP provides Software Development Kits (SDKs) for several programming environments, which makes it suitable for a range of development needs. This uniform approach streamlines how distributed data sources are managed and makes intricate workflows easier to build, improving the efficiency of AI projects.

Is Manus AI the Real Deal or Just Overhyped Automation?

Manus AI represents hours and hours of work, and without MCP it will be very difficult to maintain. The benefits of MCP are simple, and bringing all the MCP servers together will be great progress.

The adoption of MCP brings the considerable benefit of negating the requirement for distinct integrations across different AI services. MCP’s communication protocol is standardised, promoting greater interoperability and enabling a swifter, more streamlined integration process for various AI platforms. Such an enhancement in interoperability proves to be especially valuable within modern development environments where maximizing time efficiency and resource utilization is paramount.

MCP affords developers substantial flexibility. They can utilize their preferred programming languages and technology stacks while implementing this protocol, ensuring that they can effectively apply their current expertise and tools.

Cumulatively, these benefits lead to a marked improvement in workflows related to development projects by fostering smoother operations and heightened productivity levels. By incorporating MCP into their processes, developers gain the ability to focus on creative innovation and solving complex problems without being impeded by challenges associated with integration.

How Does MCP Work?

MCP uses a client-server model that streamlines the way applications deliver context and tools to large language models (LLMs). Within this framework, MCP clients handle the tasks of requesting resources and processing data. On the other side, MCP servers act as facilitators, handling these requests and granting access to external tools or sources of data. This structure eases integration efforts and reduces reliance on tailor-made solutions, freeing developers to concentrate on building AI applications.
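To make the division of labour concrete, here is a toy sketch of a server that exposes one tool and a client that discovers and calls it. The method names `tools/list` and `tools/call` come from the MCP specification as we understand it; the `TOOLS` registry, the `add` tool, and `handle_request` are hypothetical, and a real server would also publish an input schema for each tool.

```python
from typing import Any, Callable

# Hypothetical registry: tool name -> (description, Python callable).
TOOLS: dict[str, tuple[str, Callable[..., Any]]] = {
    "add": ("Add two integers.", lambda a, b: a + b),
}


def handle_request(request: dict) -> dict:
    """Toy server side: answer tool discovery and tool invocation requests."""
    method = request["method"]
    if method == "tools/list":
        result = {
            "tools": [
                {"name": name, "description": description}
                for name, (description, _) in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        params = request["params"]
        _, func = TOOLS[params["name"]]
        value = func(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}]}
    else:
        # Unknown methods get the standard JSON-RPC "method not found" error.
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32601, "message": f"Method not found: {method}"},
        }
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Toy client side: discover the available tools, then call one of them.
listing = handle_request({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle_request({
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
})
print(listing["result"]["tools"][0]["name"])
print(call["result"]["content"][0]["text"])
```

The client never needs to know how `add` is implemented. It only needs the tool's name and arguments, which is what keeps integrations from becoming tailor-made.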

To connect their data to AI tools through MCP, developers can either expose that data via MCP servers or build dedicated MCP clients. This greatly reduces the complexity of combining many AI services and keeps interactions between AI systems and databases fluid. MCP helps teams build consistent and effective AI systems that meet current development needs, especially for distributed teams working across different locations.

Message Types in MCP

MCP manages various message types to facilitate communication between clients and servers. The primary message types in MCP include Requests, Results, Errors, and Notifications. Requests are initiated by MCP clients and require a response to indicate successful processing. Results represent successful responses to Requests, confirming that the requested operation has been completed.

On the other hand, errors signify a failed request, indicating that the operation could not be completed. Notifications are designed as one-way communications that do not require an answer, providing status updates without expecting a response.

Defining message types clearly and implementing message structuring ensures reliable and structured communication between AI applications and data sources, enhancing system performance.
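The four message types map directly onto JSON-RPC 2.0 shapes, so they can be told apart by which fields are present. The sketch below assumes only the JSON-RPC 2.0 rules; `classify_message` is a hypothetical helper, not part of any SDK.

```python
def classify_message(message: dict) -> str:
    """Return 'request', 'notification', 'result', or 'error' for a message."""
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in message:
        # A request carries an id and expects a reply; a notification does not.
        return "request" if "id" in message else "notification"
    if "error" in message:
        return "error"
    if "result" in message:
        return "result"
    raise ValueError("unrecognised message shape")


examples = [
    {"jsonrpc": "2.0", "id": 1, "method": "tools/list"},
    {"jsonrpc": "2.0", "method": "notifications/initialized"},
    {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}},
    {"jsonrpc": "2.0", "id": 2, "error": {"code": -32601, "message": "Method not found"}},
]
kinds = [classify_message(m) for m in examples]
print(kinds)
```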

Transport Mechanisms: It’s All About the Protocol

MCP supports data exchange over several transport protocols, tailored to the needs of different development settings. When operating locally, MCP uses stdio for simple inter-process communication. For distributed teams, MCP recommends HTTP combined with Server-Sent Events (SSE) to stream data between external systems in real time.

Whatever the transport, MCP uses the JSON-RPC 2.0 message format, which gives a structured and uniform method of data exchange that fits many programming contexts. This choice of transports gives MCP the flexibility to work across a range of setups, from individual local tests to large distributed system integrations.
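For the local stdio case, a common framing is one JSON-RPC message per line. The sketch below assumes that newline-delimited framing and uses an in-memory `io.StringIO` buffer as a stand-in for the real stdin/stdout pipes; `write_message` and `read_messages` are hypothetical helpers.

```python
import io
import json
from typing import Iterator, TextIO


def write_message(stream: TextIO, message: dict) -> None:
    """Serialise one message per line; json.dumps escapes embedded newlines."""
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[dict]:
    """Yield one decoded message for every non-empty line in the stream."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# An in-memory buffer stands in for the pipe between client and server.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
pipe.seek(0)
received = list(read_messages(pipe))
print(len(received))
```

The same two helpers would work unchanged on `sys.stdin` and `sys.stdout`, which is why the transport can be swapped without touching the message format.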

Setting Up MCP Servers

Setting up Model Context Protocol servers requires defining environment variables for configuration and starting the server with the hype command. Although MCP servers can be added through the user interface, this approach is usually discouraged because it may introduce complexities. If you do add a server through the UI, be sure to refresh in order to see the available tools.

For developers setting up their own MCP servers, numerous sample servers are available as starting points. Toolkits for deploying remote, production-level MCP servers are expected to become available in due course.

Configuration File Format

The configuration files of MCP employ a JSON format that supports the use of nested objects and arrays, enabling the depiction of intricate settings. This systematic methodology guarantees that configurations are accessible for reading and interpretation by humans while also being compatible with machine parsing, thereby simplifying the process for developers to oversee and adjust settings when required.
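As an illustration of such a nested JSON configuration, the sketch below parses and sanity-checks a small file. The `mcpServers` layout with `command`, `args`, and `env` mirrors what Claude Desktop's configuration uses as far as we know; the server name, script name, path, and environment variable are all hypothetical.

```python
import json

# Hypothetical configuration: one server entry with nested arrays and objects.
CONFIG_TEXT = """
{
  "mcpServers": {
    "example-files": {
      "command": "python",
      "args": ["example_server.py", "--root", "/tmp/example"],
      "env": {"EXAMPLE_API_KEY": "replace-me"}
    }
  }
}
"""


def load_servers(config_text: str) -> dict:
    """Parse the config and check that each server entry has a usable shape."""
    config = json.loads(config_text)
    servers = config.get("mcpServers", {})
    for name, entry in servers.items():
        if not isinstance(entry.get("command"), str):
            raise ValueError(f"server {name!r} needs a string 'command'")
        if not isinstance(entry.get("args", []), list):
            raise ValueError(f"server {name!r} has a non-list 'args'")
        if not isinstance(entry.get("env", {}), dict):
            raise ValueError(f"server {name!r} has a non-object 'env'")
    return servers


servers = load_servers(CONFIG_TEXT)
for name, entry in servers.items():
    print(name, entry["command"], entry.get("args", []))
```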

Deployment Locations

To achieve the best performance, it is essential that MCP configuration files are located in directories that the server can easily access. This placement allows the server to use these configurations efficiently, resulting in more stable operations and enhanced performance.

It’s critical to position these files within directories tailored for particular scenarios to ensure they are leveraged properly, especially when integrating various AI tools with each data source.

Integrating MCP with InvestGlass AI

Incorporating MCP into InvestGlass AI systems lets developers establish secure, bidirectional links between data sources and AI tools. Legacy systems trap sophisticated AI models behind information silos and prevent seamless access to crucial data. MCP streamlines the workflow and dismantles those barriers across data repositories, fostering a unified setting for deploying AI solutions. The standards MCP sets for interaction and information exchange reduce the complexity of integration, enabling fluid interactions between external data points and AI-powered applications.

MCP is beneficial regardless of whether one is engaged with versatile InvestGlass AI helpers or crafting cross-platform AI software. It improves integration quality and the effective utilization of disparate information sets. Consequently, this not only amplifies efficiency within operations but also paves the way for novel methodologies in applying artificial intelligence creatively.

Using Claude Desktop with MCP

Initiating the use of Claude Desktop alongside MCP involves first acquiring the desktop application and making adjustments to the claude_desktop_config.json file. The essential step post-installation is customizing this configuration file to meet specific integration requirements. Subsequently, Claude Desktop has the capability to form a graphical representation with nodes and edges signifying connections once it gains authorization for access.

The task of discerning server status is made simple by utilizing the interface provided by Claude Desktop, which exhibits both linked servers and their respective accessible resources. This setup empowers developers by allowing them to efficiently employ Model Context Protocol (MCP) in order to establish connectivity between AI models, thereby aiding in the advancement of AI applications.

Developing Custom Integrations

The MCP SDK facilitates the creation of seamless custom integrations within MCP by offering support for Python and TypeScript. This empowers developers to utilize MCP’s capabilities with ease in their chosen development environments, streamlining the process for crafting tailored solutions.

Security and Error Handling in MCP

Maintaining strong security and effective error management is key to the smooth functioning of MCP. To boost security, authentication protocols are in place to confirm user identities before allowing access to resources. It’s crucial to check the source of every connection and sanitize incoming messages to eliminate potential weaknesses. The adoption of stringent security practices and comprehensive error handling ensures dependable operations while safeguarding confidential data from any compromised data source.
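Checking the source of a connection and cleaning an incoming message might look like the sketch below. It is an illustration under assumptions rather than a complete security layer: the allowlist, the length limit, and both helper functions are hypothetical, and real deployments would add authentication on top.

```python
from typing import Optional
from urllib.parse import urlparse

ALLOWED_ORIGINS = {"http://localhost:3000"}  # hypothetical allowlist
MAX_METHOD_LENGTH = 128  # hypothetical limit
ALLOWED_KEYS = {"jsonrpc", "id", "method", "params", "result", "error"}


def is_allowed_origin(origin_header: Optional[str]) -> bool:
    """Accept a connection only if its Origin header is on the allowlist."""
    if not origin_header:
        return False
    parsed = urlparse(origin_header)
    return f"{parsed.scheme}://{parsed.netloc}" in ALLOWED_ORIGINS


def sanitize_message(message: object) -> dict:
    """Reject malformed messages and drop fields JSON-RPC does not define."""
    if not isinstance(message, dict):
        raise ValueError("message must be a JSON object")
    if message.get("jsonrpc") != "2.0":
        raise ValueError("message must declare JSON-RPC 2.0")
    method = message.get("method")
    if method is not None:
        if not isinstance(method, str) or len(method) > MAX_METHOD_LENGTH:
            raise ValueError("invalid method name")
    return {key: value for key, value in message.items() if key in ALLOWED_KEYS}


clean = sanitize_message(
    {"jsonrpc": "2.0", "id": 1, "method": "ping", "unexpected": "dropped"}
)
print(is_allowed_origin("http://localhost:3000"), sorted(clean))
```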

In case of errors within MCP, there is a specific process for propagating them which aids in proper resolution without significantly interrupting communication flows. A set of standard error codes exists, creating a uniform method for recognizing and addressing issues when they arise. This standardised procedure facilitates quick problem-solving efforts while preserving the fidelity of the communication process.

Ensuring Data Security

MCP utilises encryption methods to safeguard data in transit, guaranteeing that confidential information remains protected. For communications involving remote data, TLS encryption offers a strong security barrier.

The protection of detailed information during transmission is crucial for maintaining the integrity of the data source and thwarting any potential security breaches.
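On the client side, protecting data in transit mostly means refusing unverified or outdated TLS connections. The sketch below uses only Python's standard `ssl` module; requiring TLS 1.2 or newer is our assumption for the example, not something the MCP specification is known to mandate.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Create a context that verifies certificates and hostnames by default."""
    context = ssl.create_default_context()
    # Assumption for this sketch: refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_client_tls_context()
print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```

A context like this can be passed to most standard-library and third-party HTTP clients when connecting to a remote MCP server.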

Standardised Error Codes

MCP employs a set of predefined error codes designed for uniform troubleshooting and consistent error handling. It also allows custom error codes beyond the standard selection, making it possible to manage errors specific to an application. This standardised approach to reporting errors supports system dependability and performance.
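Since MCP messages are JSON-RPC 2.0, the predefined codes are the ones JSON-RPC defines. The sketch below lists them and shows one custom code; `PORTFOLIO_LOCKED` and `make_error` are hypothetical names invented for this example, with the custom code placed outside the range JSON-RPC reserves (-32768 to -32000).

```python
from typing import Any, Optional

# Predefined JSON-RPC 2.0 error codes.
PARSE_ERROR = -32700
INVALID_REQUEST = -32600
METHOD_NOT_FOUND = -32601
INVALID_PARAMS = -32602
INTERNAL_ERROR = -32603

# Hypothetical application-specific code, outside the reserved range.
PORTFOLIO_LOCKED = 1001


def make_error(request_id: Any, code: int, message: str,
               data: Optional[Any] = None) -> dict:
    """Build a JSON-RPC 2.0 error response for the given request id."""
    error = {"code": code, "message": message}
    if data is not None:
        error["data"] = data  # optional extra detail for the caller
    return {"jsonrpc": "2.0", "id": request_id, "error": error}


standard = make_error(7, METHOD_NOT_FOUND, "Method not found")
custom = make_error(8, PORTFOLIO_LOCKED, "Portfolio is locked", {"retry_after_s": 30})
print(standard["error"]["code"], custom["error"]["code"])
```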

Real-World Applications of MCP

MCP tackles the prevalent issues in AI development, including scattered data links and isolated custom integration pockets. By embracing MCP, developers are equipped to construct more intelligent and expandable AI applications. Entities such as Block and Apollo have effectively integrated MCP within their systems, showcasing its efficacy in boosting operational productivity while also highlighting the tangible advantages of employing this protocol.

Practical instances of MCP servers put into action by Anthropic, external entities, and the broader community underscore both its adaptability and potency. These cases provide a clear picture of how utilizing MCP can simplify AI development while simultaneously enhancing AI application performance throughout an array of sectors.

AI-First Applications

Applications that prioritize AI, including AI assistants and integrated development environments (IDEs), can utilize MCP to improve functionality and streamline processes. Integrating general-purpose AI assistants into diverse applications increases context recognition and enhances the user experience. Of course, at InvestGlass we are looking to connect with these new concepts, but we want to see a standard approved first.

Cross-platform applications that employ MCP are able to standardize AI features, which boosts their overall capabilities.

Scalable AI Services

MCP facilitates advanced distributed processing, which is crucial for overseeing AI workflows effectively as systems expand in scale. MCP’s architecture delivers the flexibility and compatibility needed to scale AI services across various platforms. Imagine you could connect all your fintech tools in one click and maintain the links between software at no additional cost!

The standardized methodology that MCP utilizes guarantees streamlined deployment and administration of complex models when managing distributed AI processing.

Troubleshooting and Debugging MCP Servers

Various tools designed for different troubleshooting tiers make diagnosing and fixing issues with MCP servers easier. For example, the MCP Inspector offers immediate insights into server performance, facilitating rapid problem-solving.

This tool’s real-time analysis of server resources and prompt templates greatly improves the ability to oversee MCP servers effectively.

Logging and Diagnostics

The Model Context Protocol is built on a standardized way of handling logs, diagnostics, and overall system integrity, ensuring that servers can connect with data sources and tools securely. For problems to be identified and fixed promptly, every MCP server must implement robust logging practices. For instance, redirecting logs to standard error is a reliable way to prevent any inadvertent interference with protocol operations, which preserves the stability of Model Context Protocol servers.

By adopting the correct log configurations, you streamline your troubleshooting procedures and uphold the dependability of the entire context protocol. This helps guarantee that LLM applications, clients, and other tools can maintain smooth integration. In turn, this practice helps minimize disruptions to data sources and tools, allowing developers and businesses to start building solutions with assurance that the context remains intact and protected.
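When a server speaks the protocol over stdout, a stray log line on stdout would corrupt the message stream, which is why logs go to standard error. The sketch below shows one way to set that up with Python's standard `logging` module; the logger name and both helper functions are hypothetical.

```python
import json
import logging
import sys


def build_stderr_logger(name: str) -> logging.Logger:
    """Return a logger that writes only to stderr, never to stdout."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    if not logger.handlers:
        handler = logging.StreamHandler(sys.stderr)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
        )
        logger.addHandler(handler)
    logger.propagate = False  # keep root-logger handlers from duplicating output
    return logger


def encode_message(message: dict) -> str:
    """Encode one protocol message as a single line destined for stdout."""
    return json.dumps(message) + "\n"


logger = build_stderr_logger("example-mcp-server")
logger.info("server starting")  # diagnostics go to stderr
sys.stdout.write(encode_message({"jsonrpc": "2.0", "id": 1, "result": {}}))
sys.stdout.flush()  # only protocol messages go to stdout
```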

Why use MCP Inspector?

The MCP Inspector stands out as an indispensable tool for scrutinising and validating the efficiency of model context protocol servers. Acting as an open protocol component, it reinforces the notion that MCP is a standardised and transparent system designed to encourage broader development involvement. With the MCP Inspector, administrators and developers can swiftly monitor server connections, validate their context consistency, and confirm that any file or data source involved in the process functions without error.

This seamless integration of diagnostic functionalities fosters a collaborative environment between servers, clients, and data sources, preserving the underlying model context. By offering a simplified yet powerful means to connect with and analyse MCP’s internal workings, the MCP Inspector streamlines everything from general inspection to in-depth diagnostics, helping users maintain optimal performance across all LLM applications and tools.

Contributing to MCP

Contributions to MCP, or the Model Context Protocol, form a critical part of this open standard and showcase the protocol’s community-driven spirit. Since MCP is designed to facilitate seamless integration with data sources and tools, community feedback and collaboration are invaluable to its growth and sustained relevance.

Developers, system architects, and enthusiasts alike are encouraged to share their insights by addressing existing issues, adding documentation enhancements, or suggesting new features that advance MCP’s mission as a standardized way to orchestrate interactions among servers, clients, and context. Whether that involves refining prompts, improving log handling methods, or exploring fresh approaches to a two-way process of data exchange, every contribution pushes the model context protocol forward. In doing so, members of the community not only advance this open protocol but also help pave the way for more robust and user-friendly LLM applications.

Community Contributions

Active involvement from the wider community is the cornerstone of ensuring that the Model Context Protocol (MCP) meets evolving needs and consistently provides a standardized means of connecting data sources and tools. By contributing bug fixes, additional documentation, or new functionalities, community members help refine how MCP servers operate, exchange file data, and align with client requirements.

Feedback from diverse backgrounds, whether through GitHub issues, community forums, or discussions on how best to leverage advanced LLM applications, is invaluable to the building and continued refinement of the protocol. Sharing direct experiences, coding expertise, or newly discovered techniques further solidifies MCP’s stance as an open protocol framework that embraces collaborative progress. Through this inclusive approach, the MCP project benefits from collective intelligence, ultimately delivering more efficient diagnostics, richer log tracing, and refined tools capable of serving a wide spectrum of applications.

Support and Feedback Channels

Inquiries regarding contributions to MCP can be addressed within the community forum. This platform enables developers to obtain assistance from fellow colleagues and specialists in the field. Offering feedback plays an essential role in the evolution of the development process, as it empowers participants to aid in enhancing the protocol.

It is recommended that users put forward their suggestions and ideas for new features by engaging in communal dialogues and utilizing recognized pathways.

Waiting for Godot? Waiting for an Open Standard?

In conclusion, the Model Context Protocol (MCP) offers a standardised way for LLM applications to connect with data sources and tools, from content repositories and databases to host application servers, through two-way connections. Using MCP servers and clients under an open standard significantly reduces fragmented integrations. The protocol enables sophisticated models to query, process, and interact with any new data source in a standard way, improving how business tools use AI-powered tools.

Because MCP addresses the need for secure and seamless client connections, developers can rely on open-source contributions and code to enhance this universal standard. MCP fosters a standardised environment in which advanced solutions can be built on log management, prompts, and real-time file access. By eliminating repeated, fragmented integrations, Model Context Protocol servers simplify how your data source requirements are met while maintaining TLS encryption and the security of business tools.

With InvestGlass monitoring your MCP-based implementation, you’ll always have expert guidance to process and refine connections across all your data sources. We will keep track of every step, ensuring you find the best path forward under this open protocol. By leveraging MCP, InvestGlass helps you integrate sophisticated models and clients through a standardised protocol, paving the way for more efficient, streamlined LLM applications, so you can confidently focus on innovation.